MAKING OF – Augmented Journalism: An Immersive Experiment in High-Fidelity Storytelling

Beyond the prompt: Using AI as a force multiplier to give a new voice to Lynda Van Devanter’s silent front.

This “Making Of” explores the editorial architecture behind our latest investigation, Blood on the Typewriter.

Rather than a simple behind-the-scenes look, this case study is a reflection on the emergence of Augmented Journalism. We detail how the KAITSA Narrative Engine was used to bridge the gap between cold archives and the raw, sensory reality of the Vietnam War’s forgotten nurses. By documenting our workflow—from autonomous ideation to high-fidelity visual placeholders—we invite you to discover how AI, when steered by human empathy, can deepen the impact of long-form storytelling without ever replacing the journalist’s soul.

[PROCESS NOTE: THE AI ART DIRECTOR]

[Part 1: The Generation]

Readers will notice structural placeholders in the final text, such as image descriptions like [PHOTO 1 - FULL BLEED, GRITTY] and layout instructions like [PULL QUOTE - MASSIVE, CENTERED]. We kept them deliberately, to document how the KAITSA Narrative Engine generates not just the prose but the entire article structure, including its visual rhythm.

To create the visuals, the full article was fed to Gemini, acting as the project’s dedicated “Iconographer.” Gemini analyzed the text and generated precise image prompts in the appropriate period style, under strict human supervision to maintain narrative consistency.

Visuals: The imagery was co-designed through an iterative brainstorming session. Gemini’s initial proposal included a nurse with a prominent Red Cross armband. The image was visually striking, but I triggered a human-led fact-checking loop to verify its realism.

Visual Fact-Check: In the field, many medics and nurses avoided wearing white armbands with red crosses or other highly visible markings, preferring subdued insignia or removing them completely to avoid drawing enemy fire.

Consequently, I directed the engine to move the medical insignia to a tent in the background. This shows that even with a high-end model, human supervision is essential to filter out “hallucinated clichés” and sculpt a historically accurate scene. The final visual reflects this survival tactic, prioritizing realism over iconography.

A striking example of this collaboration appears in Section V (Midnight Oil). Gemini autonomously conceptualized a visual not explicitly detailed in the raw structure: an overhead shot of a bed covered in handwritten testimonies. It drew a direct link between text and image, describing the scene as a “chaotic emotional puzzle” in which a red pen circling a paragraph symbolizes the attempt to organize collective trauma (“I thought it was just me”).

These AI-generated prompts were then executed by Nano Banana Pro to produce the final images (synthographies).

Why I Write “Visual Homage”: A Note on Synthetic Ethics

At the bottom of my visuals, you will see an unusually specific credit line:

Scripting: Gemini | Synthography: Nano Banana Pro | Motion Synthesis: Grok

This isn’t technical vanity; it is a declaration of provenance. As a photographer, I believe that crediting a work means taking responsibility for its creation chain. In the synthetic age, authorship is no longer a solo act, but a pipeline:

Scripting (The Intent): The raw idea, structured by an LLM.

Synthography (The Render): The visual output. I refuse to use the word “Photography” here, out of respect for those who capture physical light on a sensor. This is writing with data, not light.

Motion Synthesis (The Movement): The generative animation.

The Question of “Visual Debt”

This transparency imposes a second duty. If I prompt an AI to generate an image “in the style of Don McCullin”—mimicking his grain, his contrast, and his framing of trauma—the AI is not creating ex nihilo. It is retrieving patterns from a body of work that McCullin spent a lifetime building, often at great personal risk.

AI does not have a style; it only has mimicry.

Therefore, when a prompt explicitly invokes a master, the credit line must acknowledge the debt. To omit this would be aesthetic laundering. In the Kaitsa Lab, we credit the source of inspiration with the same rigor as the tool of production.

The final credit line reads:

Scripting: Gemini | Synthography: Nano Banana Pro | Visual Homage: Don McCullin

This is not just courtesy. It is an acknowledgment that without the human eye of the past, the artificial eye of the present would be blind.
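The crediting rule described above (credit every tool in the chain, and add a Visual Homage entry only when a prompt explicitly invokes a master) is mechanical enough to encode as a pipeline step. The Python sketch below is a hypothetical illustration, not part of the KAITSA Engine or the Kaitsa Lab's tooling: the `Visual` record, `credit_line` function, and `STAGE_ORDER` tuple are invented names, and it assumes each visual records which model handled each stage plus any master named in its prompt.

```python
from dataclasses import dataclass, field

# Hypothetical stage labels, in the order they appear in this article's credit lines.
STAGE_ORDER = ("Scripting", "Synthography", "Motion Synthesis")


@dataclass
class Visual:
    # Maps each stage label to the model that performed it, e.g. {"Scripting": "Gemini"}.
    tools: dict[str, str]
    # Masters explicitly invoked in the prompt ("in the style of ..."); empty if none.
    homage: list[str] = field(default_factory=list)


def credit_line(visual: Visual) -> str:
    """Build a provenance credit line (illustrative sketch only)."""
    unknown = set(visual.tools) - set(STAGE_ORDER)
    if unknown:
        # Reject unknown stages rather than silently dropping them from the credit.
        raise ValueError(f"Unrecognized stage(s): {sorted(unknown)}")
    # Emit stages in a fixed order, regardless of the order they were recorded in.
    parts = [f"{stage}: {visual.tools[stage]}" for stage in STAGE_ORDER if stage in visual.tools]
    # Acknowledge the "visual debt" only when a master was explicitly named in the prompt.
    parts += [f"Visual Homage: {master}" for master in visual.homage]
    return " | ".join(parts)


if __name__ == "__main__":
    still = Visual({"Scripting": "Gemini", "Synthography": "Nano Banana Pro"}, ["Don McCullin"])
    print(credit_line(still))
    # Scripting: Gemini | Synthography: Nano Banana Pro | Visual Homage: Don McCullin
```

Sorting by a fixed stage list, rather than by whatever order the tools were logged in, keeps every credit line in the same Scripting, Synthography, Motion Synthesis sequence; a video record that also includes "Motion Synthesis": "Grok" would produce the four-part credit used for the motion clips in this piece.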

[Part 2: The Infographic Case Study – Human-in-the-Loop]

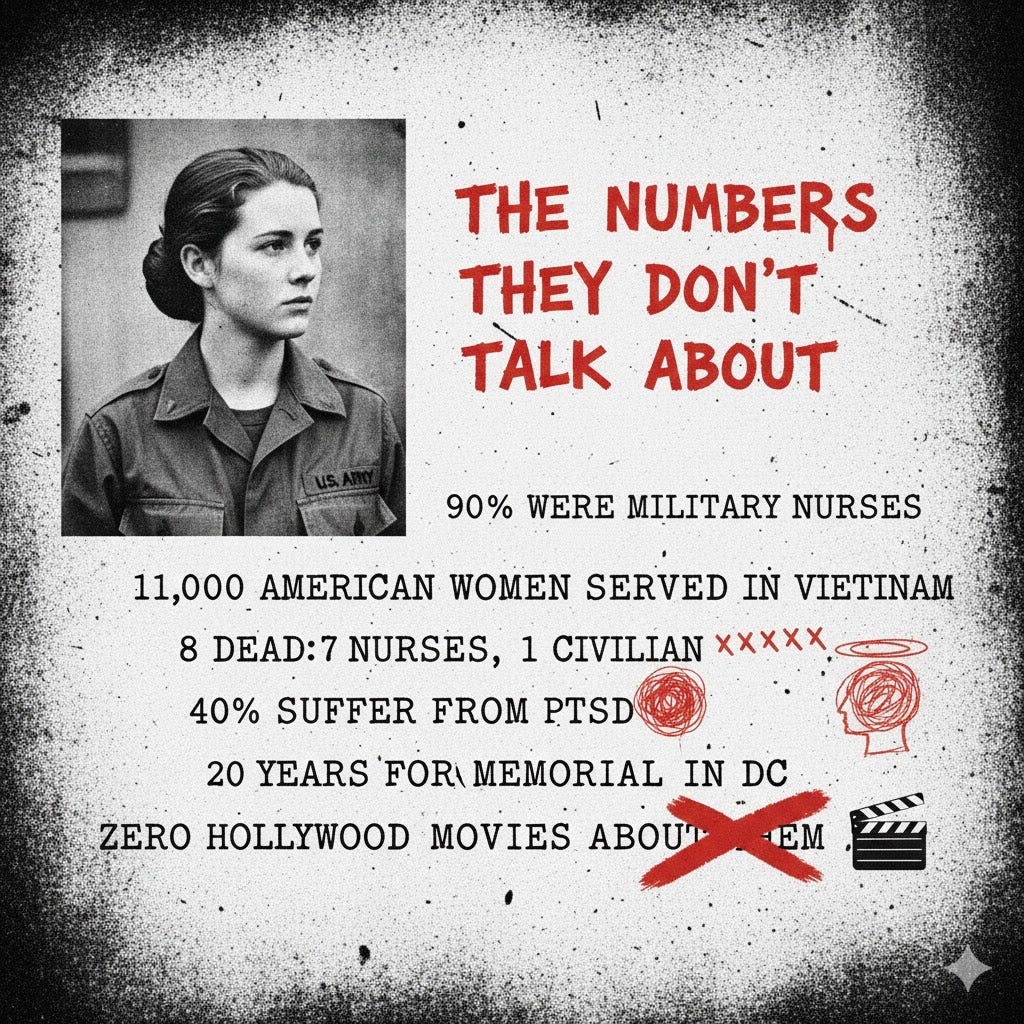

The infographic “THE NUMBERS THEY DON’T TALK ABOUT” went through a rigorous evolution:

Aesthetic Alignment: We chose a 2010s-era Gonzo aesthetic (Xerox textures, dripping stencil) to evoke an urgent, underground zine feel.

Historical Fact-Checking: Initial AI drafts mistakenly used modern digital camouflage. Through Human-in-the-Loop (HITL) intervention, we replaced it with authentic OG-107 (Olive Green 107) jungle fatigues and applied the strict 1968 military grooming standards.

Visual Symbolism: We translated raw statistics into emotional anchors, using hand-drawn red crosses and a slashed-out film clapperboard to symbolize the “Hollywood blackout” of these stories.

[Part 3: The Real-World Application]

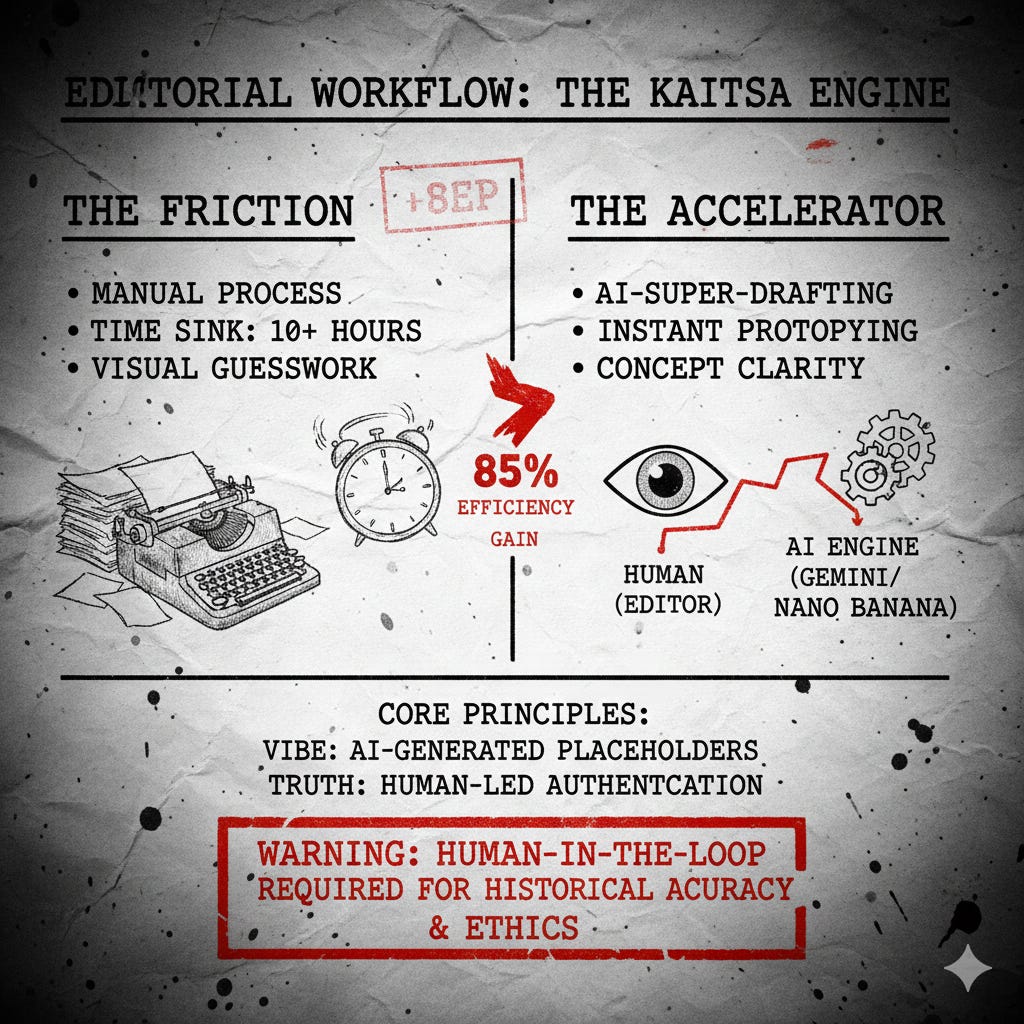

It is crucial to understand the ultimate purpose of this simulation. In an active newsroom, this AI-assisted workflow would act as a “Super-Drafting” tool.

Note on Aesthetic Versatility: While this specific investigation adopts a Gonzo-inspired visual style to match the raw, underground tone of the Vietnam archives, the KAITSA Engine is stylistically agnostic. Had this been a New Yorker-style long-read, the AI Art Direction would have pivoted instantly toward minimalist watercolors or architectural “Ligne Claire” illustrations. The technology doesn’t dictate the aesthetic; it executes the Editor’s vision with chameleon-like precision.

The AI images and curated soundtracks presented here function as high-fidelity placeholders. They allow the Editor-in-Chief to visualize the final “look, feel, and sound” of the story instantly, validating the graphical direction and narrative tone before a single euro is spent on licensing or production.

In a real-world publication scenario, the workflow would shift back to the human team for the final mile:

Authentication: AI-generated portraits are replaced by actual historical archival photos (e.g., of Lynda Van Devanter).

Validation: The human Editor-in-Chief refines the editorial nuances and verifies every historical detail.

Rights Management: Final music selections and images are formally licensed through the appropriate agencies.

The Guardrail: AI provides the Vibe; Humans provide the Truth.

The KAITSA Engine demonstrates that AI is an accelerator for editorial vision, not a substitute for historical reality or human judgment.

[Part 4: The Workflow Revolution: Traditional vs. KAITSA]

1. Analysis

Traditional: Hours of manual transcription and thematic coding.

KAITSA Workflow: Instant Synthesis. Real-time thematic extraction and “métier” titling (The Iconographer, The Archivist).

2. Conceptualization

Traditional: Multiple brainstorming meetings to find the “angle.”

KAITSA Workflow: Autonomous Ideation. AI identifies non-obvious visual links (e.g., the “chaotic emotional puzzle”).

3. Visual Drafting

Traditional: Briefing photographers/designers; waiting days for mock-ups.

KAITSA Workflow: High-Fidelity Placeholders. Instant generation of “look & feel” using Nano Banana Pro.

4. Historical Check

Traditional: Manual verification of details (uniforms, dates, facts).

KAITSA Workflow: Human-in-the-Loop (HITL). Human corrects AI anachronisms (e.g., swapping digital camo for OG-107).

5. Final Production

Traditional: Licensing archival photos and final typesetting.

KAITSA Workflow: Validated Precision. The “final mile” focuses exclusively on authentication and licensing real history.

In short, AI doesn't replace the journalist; it acts as a force multiplier, automating tedious tasks to restore their most precious asset: the time needed for deep field investigation, empathy, and rigorous fact-checking.

The Ethical Warning

While the KAITSA Engine accelerates vision, we acknowledge the following Critical Warnings:

The Hallucination Risk: AI prioritizes “Aesthetic over Accuracy.” Human veto is mandatory to correct anachronisms (e.g., modern camo on 1960s nurses).

The Transparency Mandate: To dismantle the “Deepfake Trap,” vague labels are not enough. At Kaitsa Lab, we enforce Immediate Granular Disclosure. From the very first Mock-Up stage, we explicitly credit every specific AI model involved in the chain to prevent any confusion between synthetic visualizations and authentic archives.

Example of a standard Kaitsa Credit Block for a visual mock-up: [ Scripting: Gemini | Synthography: Nano Banana Pro | Motion Synthesis: Grok | Visual Homage: Don McCullin ]

The Standardization Threat: Without strong human curation, AI risks producing a “homogenized” look. KAITSA insists on human-led stylistic pivots.

[Part 5: The Immersive Layer – Augmented Reading & Sonic Narratives]

The KAITSA Narrative Engine doesn’t stop at prose and static imagery. This article uses a multi-sensory approach to bridge the gap between 1968 and the reader’s screen:

Video: You will notice short, 6-second videos. These “memory flashes” were generated to give a haunting, living pulse to the archive.

Scripting: Gemini | Synthography: Nano Banana Pro | Motion Synthesis: Grok | Visual Homage: Don McCullin

Aural Anchors: Music is used as a narrative pacer. We recommend launching the specific tracks below at their designated chapters.

The Future: Sonic Narratives: We are exploring the possibility of a Full Audio Experience. Imagine this text read by a synthetic voice with the texture of a 1960s radio broadcast, layered with sound design: the rhythmic clacking of a typewriter merging into the distant thrum of Huey helicopter blades. This would transform the article into a “Cinema for the Ears,” where music and text-to-speech collaborate to tell the story of the Vietnam nurses in high-definition sound.

STRATEGIC SOUNDTRACK (AI MUSIC RECOMMENDATIONS)

Recommended by Gemini (AI) to sync with the article’s emotional beats.

[ ● ▬▬ ● ] MOOD TAPE

The Arrival (Section I)

The Doors – The End | Watch on YouTube

Why: Sets the psychedelic dread of the field hospitals.

The Invisible Front (Section II)

Jefferson Airplane – White Rabbit | Watch on YouTube

Why: Mirrors the surreal absurdity of a combat zone that “didn’t exist.”

The Numbers (Section III – The Infographic)

The Rolling Stones – Gimme Shelter | Watch on YouTube

Why: The urgent energy needed to process the violent reality of the casualties.

The Scars (Section IV)

Alice In Chains – Rooster | Watch on YouTube

Why: A dark, early-90s grunge reflection on the long-term PTSD carried home by Vietnam veterans.

The Wait (Section V)

Sinead O’Connor – Nothing Compares 2 U | Watch on YouTube

Why: Evokes the raw grief of the 20-year wait for national recognition.

The Fade Out (Conclusion)

Mazzy Star – Fade Into You | Watch on YouTube

Why: A haunting note on how these heroines faded from collective memory.

[SONIC SCRIPT EXAMPLE: SECTION III]

(For those interested in the future of audio-augmented longreads)

[0:00] Ambience: Distant artillery and tropical birds.

[0:05] Mechanical: Heavy typewriter clacking (Clack-clack-DING).

[0:15] Music: Gimme Shelter intro pulses under the narration.

[0:30] SFX: A Huey helicopter blade (thump-thump-thump) cuts through the music at each key statistic.

FEEDBACK REQUEST: Does this “Augmented Reading” format change your perspective? Let us know in the comments.